By Using ChatGPT, Are You Putting Your Company at Risk?

As of May 2024, ChatGPT has a whopping 180.5 million users. The site generated over 1.63 billion visits in February 2024, and it took only two months to reach 100 million monthly active users, making ChatGPT the fastest-growing consumer app in history at the time.

No one could have predicted the success of ChatGPT, not even OpenAI. No wonder companies and regulators haven’t had a chance to anticipate the phenomenon and define policies in advance on how employees should or should not use ChatGPT. While some companies have banned it completely, others are trying to find the right guidelines to let their teams leverage the power of AI and use ChatGPT safely for work.

Like millions of others, you might have been using ChatGPT to get work done faster… By doing so, have you put your company at risk? 😱

To Be Stored or Not to Be Stored, That Is the Question

By design, the model behind ChatGPT does not carry your prompts over from one conversation to the next, and your interactions are isolated from those of other users. When you start a new conversation, ChatGPT doesn’t remember what you discussed previously, and you certainly cannot query information from other users’ conversations.

The downside is that you might have to repeatedly specify that you want answers written in UK English rather than US English, the tone you prefer, and so on. On the plus side, each new conversation starts as if it were your very first time using the bot.

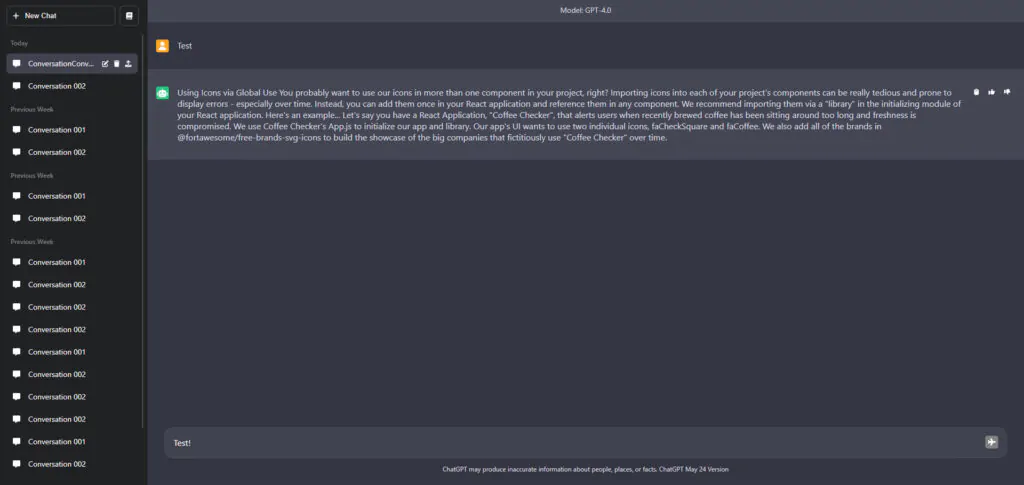

You might be wondering: “Hang on, if ChatGPT doesn’t retain any information, how come I can see all my chat history on the left-hand side of the screen?”

The chat history in the sidebar is managed by ChatGPT’s user interface, not by the model (the LLM) itself. The interface stores all the messages in each thread. When you return to a previous conversation and submit a new prompt, that prompt is sent together with the earlier messages as context. ChatGPT can then interpret your request in light of the previous exchange, and you can pick the conversation back up without losing context.
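The mechanism described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not how OpenAI actually implements ChatGPT. `fake_model` is a hypothetical stand-in for an LLM that keeps no state between calls. `ChatThread` is a hypothetical stand-in for the history the interface keeps. Each new prompt is appended to the thread, and the whole thread is resent to the model.

```python
from dataclasses import dataclass, field


def fake_model(messages: list[dict[str, str]]) -> str:
    """Hypothetical stateless model: it only 'knows' the messages passed in this call."""
    user_turns = [m["content"] for m in messages if m["role"] == "user"]
    return f"I can see {len(user_turns)} user message(s); latest: {user_turns[-1]!r}"


@dataclass
class ChatThread:
    """Hypothetical client-side thread, playing the role of the chat-history sidebar."""
    messages: list[dict[str, str]] = field(default_factory=list)

    def send(self, prompt: str) -> str:
        # Store the new prompt in the thread, then resend the WHOLE thread as context.
        self.messages.append({"role": "user", "content": prompt})
        reply = fake_model(self.messages)
        # The reply is stored by the client too, so later prompts carry it along.
        self.messages.append({"role": "assistant", "content": reply})
        return reply


thread = ChatThread()
thread.send("Please write in UK English.")
print(thread.send("Summarise our Q3 plan."))
# I can see 2 user message(s); latest: 'Summarise our Q3 plan.'

# A brand-new thread starts empty, so the model only sees the one new prompt.
print(ChatThread().send("Summarise our Q3 plan."))
# I can see 1 user message(s); latest: 'Summarise our Q3 plan.'
```

The model function never holds on to anything between calls. All of the "memory" lives in the client’s list of messages. This is why a fresh thread knows nothing about earlier ones.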

So is that all ChatGPT stores? Well, it depends. In some cases, OpenAI may store your conversations for “training purposes”. Just as your phone call with a call centre may be recorded to train agents, OpenAI wants to learn from your interactions with ChatGPT.

Why might ChatGPT need to store information?

Unless you opt out, OpenAI may use anonymised data from your conversations to improve its LLMs. This means that data, while not personally identifiable, could be stored and used internally, which raises potential security concerns.

OpenAI uses this data to train its AI at scale. Training focuses on extracting and generalising patterns in the data, not on retaining or recalling specific user inputs. The process adjusts the model’s parameters based on aggregated data from many interactions. This improves the model’s contextual understanding, grasp of language and cultural nuances, overall performance, and personalisation, as well as its ability to detect sensitive topics and respond appropriately. The goal is a smarter, safer, and more reliable AI. The bot is not always right, but training on this data helps it improve over time.

Is It Safe to Use ChatGPT for Work?

Even though, by design, ChatGPT will not share or reveal one user’s interactions to another user (the model does not retain specific details from individual sessions to recall later in sessions with other users), there are two key risks to consider:

- If your compliance regulations require your data to stay within your country or region, be aware that information used for training might be stored in data centres around the world. This could put you in breach of those requirements and of confidentiality.

- OpenAI is committed to high security standards across its services, but if a data breach occurs at any of those data centres, your data could be at risk.

The Model Improvement Toggle

If you have been using ChatGPT (on either a free or a paid plan) without adjusting your settings, you might have missed a critical privacy option in the Data Controls menu: the “Model improvement” setting.

- Web: Profile icon at the top right > Settings > Data Controls > “Improve the model for everyone.”

- iOS: Tap the three dots at the top right > Settings > Data Controls > “Improve the model for everyone.”

- Android: Tap the three horizontal lines at the top left > Settings > Data Controls > “Improve the model for everyone.”

Model Improvement: ON or OFF?

By default, “Model improvement” is enabled. This allows OpenAI to use data from your interactions to refine its models and improve response accuracy. It helps the technology develop, but it also means OpenAI may store your data.

Several organisations, including Samsung and JPMorgan, have banned or restricted their employees’ use of ChatGPT over concerns that sensitive information could be shared. They made this decision to avoid the risk of confidential information, such as source code or financial details, being captured by OpenAI and stored to train the model.

On the other hand, companies like Microsoft and Salesforce have built ChatGPT functionality into various processes. Their employees can use it as long as they follow critical guidelines on anonymisation, ethical use, and security.

If your prompts to ChatGPT are not carefully anonymised, consider turning Model improvement off so that potentially sensitive information is not stored to train the model.
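As a rough illustration of what anonymising a prompt can look like in practice, here is a minimal Python sketch. It replaces email addresses, phone numbers and a list of known sensitive terms with placeholders before a prompt is pasted into ChatGPT. The `redact` function, its regular expressions and the example terms are all hypothetical and deliberately simple. They will miss many kinds of sensitive data and are no substitute for your organisation’s own anonymisation policy.

```python
import re
from collections.abc import Iterable

# Hypothetical, illustrative patterns: they catch common email and phone formats,
# not every kind of personal or confidential data.
EMAIL = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")
PHONE = re.compile(r"\+?\d[\d\s().-]{7,}\d")


def redact(prompt: str, sensitive_terms: Iterable[str] = ()) -> str:
    """Replace emails, phone numbers and listed terms with placeholders."""
    text = EMAIL.sub("[EMAIL]", prompt)
    text = PHONE.sub("[PHONE]", text)
    # Terms you already know are sensitive (client names, project codenames, etc.).
    for term in sensitive_terms:
        text = re.sub(re.escape(term), "[REDACTED]", text, flags=re.IGNORECASE)
    return text


print(redact(
    "Email jane.doe@acme.co.uk or call +44 20 7946 0958 about Project Falcon.",
    sensitive_terms=["Project Falcon"],
))
# Email [EMAIL] or call [PHONE] about [REDACTED].
```

Pattern-based redaction like this is only a first line of defence. Anything the patterns don’t anticipate, such as source code or financial figures, will pass straight through, which is why the Model improvement setting still matters.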

Note that this change does not affect data already collected unless you specifically request its deletion. The sooner you apply this setting, the more control you have over your data.

Let’s help each other

Given how fast AI is evolving, it’s daunting to keep up and innovate while keeping risk to a minimum. It simply moves too fast! So let’s help each other and share our findings as we progress on our AI journey. Feel free to post your comments or questions; we’ll be happy to look into them and see how we can help.