Smart Enough to Be Dangerous: If AI’s Doing the Work, What Are You Doing?

AI promises to handle the boring stuff. Automate the admin. Write the emails. Build the reports. Suggest the next-best-whatever so we can finally focus on the “valuable work.” You know, the strategic, impactful, deeply human things we keep saying we’d do, if only we had the time.

And to be fair, AI delivers. Sort of.

Now we can get dashboards in seconds. Code in minutes. Outreach campaigns that sound polished, even if they were typed by a sleep-deprived junior who just discovered ChatGPT. It’s impressive. It’s efficient. At ProQuest, we think it’s also quietly reshaping how people work, and not always in ways they realise. So if AI’s already doing the work... what’s left for you?

The Illusion of Intelligence

Let’s be honest. Artificial Intelligence feels smart. It can do in seconds what used to take hours. It gives answers that sound polished and confident, like it knows exactly what it’s talking about. But it isn’t actually thinking. It draws on patterns learned from huge amounts of data to piece together responses that sound right. And when something sounds right and shows up instantly, it’s easy to trust it without thinking twice.

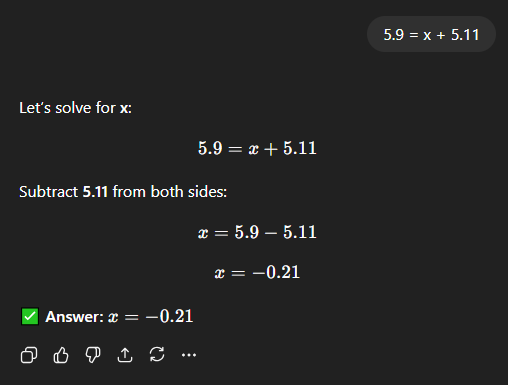

We’ve seen this firsthand, more than once. AI can hallucinate facts, invent sources, misquote laws, and, just recently, botch a simple algebra problem.

The correct answer should have been 0.79. What it gave instead wasn’t even close, but it stated it as confidently as if it were.

It’s not trying to lie, but it’s still wrong. That’s why it’s on us to stay sharp. AI won’t second-guess itself. So we have to.

It’s Not About Losing Jobs

The real risk with Artificial Intelligence isn’t that it will take your job. It’s that it can slowly take away the moments where you’d normally stop and think, and, in turn, affect the quality of what you do.

You’ve probably seen this already. An AI-generated answer gets dropped into a deck without a second glance. Code gets deployed because “it compiled fine.” A chatbot suggests a response and someone hits send, trusting it got the tone right.

None of this is malicious. It’s just convenient. But the more we skip the “does this make sense?” step, the more we lose our edge. Sharpness, curiosity, and instinct start to fade.

We’ve seen what happens when that mindset scales.

In 2024, Klarna made headlines for shrinking its workforce by thousands and shifting heavily to generative AI. The promise was speed, scale, and savings. But the cracks showed fast. AI could handle volume, but not nuance. Complex support queries stalled. Customers lost trust. Before long, Klarna started hiring people back into the roles it had just automated. The tech didn’t fail. The thinking did.

We’re seeing a similar trend in software development. AI has made it easier than ever to generate code quickly, a practice often called “vibe coding.” It helps get ideas moving and takes the pressure off the blank screen. But speed still needs structure.

Behind every AI-generated function or script, someone needs to understand what it does, ensure it’s secure, and confirm it works in the real world. Human expertise hasn’t gone away. If anything, it has become more important.

AI can write the code. But experts still make sure it actually works.

So What Do We Do?

Let’s not pretend we’re not using AI. We are. And we should.

We believe in embracing it in practical, high-impact ways. Agentforce powers autonomous agents that help our customers automate case resolution, schedule field jobs in real time, and build smarter service workflows every day.

And behind every one of those wins? A human. Someone checking the output. Adding context. Asking the follow-up question the system never could. And someone improving the system itself.

That’s the mindset to protect. Use AI to move faster. Automate the boring stuff. Get the draft done. Just don’t forget the part that still needs you.

Here’s how to lead with AI, without losing your edge:

- Build AI into workflows, not in place of them: Use Artificial Intelligence to support your teams, not replace them. Automate the repetitive work, but keep people involved where judgment, nuance, and customer experience still matter.

- Set review checkpoints: Make human review part of the process. Treat AI outputs as drafts, not decisions. Especially in customer-facing work, accuracy and tone still need a final check.

- Invest in Artificial Intelligence literacy across the business: Help your people understand how it works and where it can go wrong. The goal isn’t just usage, but smart usage.

- Balance AI adoption with capability building: Don’t let speed come at the cost of development. Make sure your teams still build the core skills that Artificial Intelligence can't replace: problem solving, communication, and critical thinking.

- Assign ownership for output: If AI is part of the process, someone still needs to be accountable for the final result. Define clear responsibilities so quality doesn’t slip through the cracks.

- Track the impact: Watch what AI is improving and what it might be weakening. Monitor quality, customer satisfaction, and team engagement. Don’t just measure productivity. Measure outcomes.

- Lead with clarity, not hype: Adopting Artificial Intelligence sounds bold, but implementation is what counts. Be clear about where it fits, why you’re using it, and what you expect it to improve.

Keep using Artificial Intelligence. Let it take the admin, the drafts, the grunt work. But the thinking? That still needs you.

The best leaders won’t be the ones who used the most AI. They’ll be the ones who used it well and built teams that stayed sharp while moving fast.